import requests

import time

import json

import datetime

from tqdm import tqdm

import random

import pandas as pd

from pandas import read_csv

import numpy as np

import seaborn as sns

import matplotlib.pyplot as plt

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import MinMaxScaler, StandardScaler

from sklearn.metrics import mean_squared_error, r2_score

from sklearn.metrics import auc, recall_score, precision_score

from sklearn.metrics import precision_recall_curve, confusion_matrix

from sklearn.metrics import plot_precision_recall_curve

from sklearn.ensemble import RandomForestRegressor, AdaBoostRegressor

from sklearn.ensemble import GradientBoostingRegressor, ExtraTreesRegressor

from sklearn.tree import DecisionTreeRegressor

from sklearn.neighbors import KNeighborsRegressor

from sklearn.svm import SVR

from sklearn.linear_model import LinearRegression

import xgboost as xgb

from sklearn.model_selection import RandomizedSearchCV, GridSearchCV

from statsmodels.tsa.arima_model import ARIMA

from math import sqrt

import warnings

warnings.filterwarnings("ignore")

pd.options.display.max_columns = None

# pd.options.display.max_rows = None

from IPython.core.display import HTML

import pprint

def mean_absolute_percentage_error(y_true, y_pred):

"""Return mean absolute percentage error of predicted values."""

y_true, y_pred = np.array(y_true), np.array(y_pred)

return np.mean(np.abs((y_true - y_pred) / y_true)) * 100

font = "Roboto-Regular.ttf"

pp = pprint.PrettyPrinter(indent=4, width=100)

HTML('''

<style>

.output_png {

display: table-cell;

text-align: center;

vertical-align: middle;

}

</style>

<script>

code_show=true;

function code_toggle() {

if (code_show){

$('div.input').hide();

} else {

$('div.input').show();

}

code_show = !code_show

}

$( document ).ready(code_toggle);

</script>

<form action="javascript:code_toggle()"><input type="submit" value="Click here to toggle on/off the raw code."></form>

''')

Stock price prediction using various techniques is a classic problem that many researchers have been tackling for many years. Although efficient market hypothesis states that it is not possible to predict stock prices given its random nature, many still attempt to find patterns in price movements. Technical analysts take advantage of factors based on prices and volume to figure out potential opportunities to make money in the short- or long-run. In the Philippines, the Philippines Stock Exchange, Inc. (PSE) is the national stock exchange. In 2019, the PSE had a market capitalization of PhP 13.395 trillion with an average value turnover of PhP 7.29 billion.

In this study, the data on the stock price of Jollibee Food Corporation (stock code: JFC), which is a stock listed in PSE, from January 2012 to December 2019 were used to develop a model and a method that would improve the accuracy of predicting stock prices. The following results were highlighted in this study.

- Among the three models used in this study, Auto Regressive Integrated Moving Average (ARIMA), Gradient Boosting Regression (GBR) with naive forecasting of features and a combination of ARIMA and GBR, ARIMA gave the smallest error measurements (MSE, RMSE, MAPE and last point MAPE).

- The models considered were significantly improved by choosing a time interval as the train set which starts and ends at two different days, not necessarily consecutive, when the Relative Strength Index (RSI) touches the support level (RSI=30) from a declining trend.

Stock traders and investors: They can consider a different way of looking at moving average-based indicators as part of their decision when trading stocks.

Researchers: They can consider a rule-based selection of time intervals of stock prices when working with stock price prediction research.

# def get_stocks(stock_code, start_date, end_date):

# """

# Get stock info from start_date to end_date.

# PARAMETERS

# ----------

# stock_code : list

# List of stock codes to retrieve info of.

# start_date : str

# Start date of data with format 'YYYY-MM-DD'.

# end_date : str

# End date of data with format 'YYYY-MM-DD'.

# RETURN

# ------

# stocks : pandas.DataFrame

# DataFrame with stock info name-price-symbol-date.

# """

# dates = (pd.date_range(start=start_date,

# end=end_date).to_series()

# .apply(lambda x :

# x.strftime("%Y-%m-%d")))

# stocks = pd.DataFrame()

# for code in stock_code:

# for date in dates:

# data = ''

# wp_endpoint = ('http://phisix-api2.appspot.com/stocks/' + code

# + '.' + date +'.json')

# data = requests.get(wp_endpoint).text

# if data:

# stock = json.loads(data)

# stock_data = (pd.DataFrame(stock['stock'][0])

# .reset_index(drop=True)

# .drop(1)

# .drop('percent_change', axis=1))

# stock_data['date'] = date

# stocks = stocks.append(stock_data)

# # time.sleep(0.5)

# return stocks

# stock_code = ['JFC']

# start_date = '2012-01-01'

# end_date = '2019-12-31'

# stocks = get_stocks(stock_code, start_date, end_date)

# stocks.to_csv('jfc_stocks_data.csv', index=False)

df1 = pd.read_csv('jfc_stock_data.csv')

df = df1.copy()

def get_returns(df):

"""Returns the daily returns of stock price."""

df = df.reset_index(drop=True)

day1 = np.array([0])

rets = (df.price.diff()[1:].to_numpy() / df.price[:-1].to_numpy())

df['returns'] = np.concatenate((day1, rets))

return df[1:]

def compute_sma(stock):

"""Return SMAs."""

for d in [5, 8, 13, 21, 50, 100]:

stock['sma_' + str(d)] = stock.price.rolling(window=d).mean()

return stock

def compute_ema(stock):

"""Return EMAs"""

for d in [3, 9, 12, 16, 26, 200]:

stock['ema_' + str(d)] = (stock.price

.ewm(span=d, adjust=False)

.mean())

return stock

def compute_macd(stock):

"""Return MACD and MACD signal."""

stock['macd'] = stock.ema_12 - stock.ema_26

stock['macd_signal'] = stock.macd.ewm(span=9, adjust=False).mean()

return stock

def compute_rsi(stock, period=14):

"""Return RSI."""

close = stock.price

delta = close.diff()

gain, loss = delta.copy(), delta.copy()

gain[gain < 0] = 0

loss[loss > 0] = 0

# Calculate the exponential moving averages (EWMA)

roll_up = gain.ewm(com=period - 1, adjust=False).mean()

roll_down = loss.ewm(com=period - 1, adjust=False).mean().abs()

# Calculate RSI based on exponential moving average (EWMA)

# relative strength = average gain/average loss

rs = roll_up / roll_down

rsi = 100 - (100 / (1 + rs))

stock['rsi'] = rsi

return stock

def volume_minmax(stock):

"""Return MinMax scaled volume."""

scaler = MinMaxScaler()

stock['volume'] = scaler.fit_transform(stock[['volume']])

return stock

def compute_derived_features(stock):

"""Return DataFrame with all derived features."""

stock = compute_sma(stock)

stock = compute_ema(stock)

stock = compute_macd(stock)

stock = compute_rsi(stock)

stock = volume_minmax(stock)

return stock

def diff_mas(stock):

"""Return MA differences."""

smas = ['sma_5', 'sma_8', 'sma_13', 'sma_21', 'sma_50', 'sma_100']

emas = ['ema_3', 'ema_9', 'ema_12', 'ema_16', 'ema_26', 'ema_200']

for i in range(1, len(smas)):

for j in range(i):

stock[smas[i] + '_' + smas[j]] = ((stock[smas[i]] -

stock[smas[j]]) /

stock['price'])

stock[emas[i] + '_' + emas[j]] = ((stock[emas[i]] -

stock[emas[j]]) /

stock['price'])

return stock

The following data are included in this study.

| Data Field | Description |

|---|---|

| price | Daily closing price (PhP) |

| volume | Number of shares traded daily |

| date | Date of trading |

| returns | Daily percentage change |

| sma_n | n-day simple moving average (SMA-n) of prices |

| ema_m | m-day exponential moving average (EMA-m) of prices |

| sma_n_sma_m | Difference of SMA-n and SMA-m |

| ema_n_ema_m | Difference of EMA-n and EMA-m |

| macd | Moving average convergence-divergence; difference between EMA-12 and EMA-26 |

| macd_signal | EMA-9 of MACD |

| rsi | Relative Strength Index |

The stock price of Jollibee Food Corporation (JFC) from January 2012 to December 2019 are used in this study. The data were collected from Philippine Stock Exchange Composite Index RESTful API.

# Calculate daily returns of stock price

jfc = get_returns(df[df.symbol == 'JFC'])

# Calculate derived features

jfc = compute_derived_features(jfc)

jfc = jfc[100:].reset_index(drop=True)

# Calculate differences of smas and emas

smas = ['sma_5', 'sma_8', 'sma_13', 'sma_21', 'sma_50', 'sma_100']

emas = ['ema_3', 'ema_9', 'ema_12', 'ema_16', 'ema_26', 'ema_200']

jfc = diff_mas(jfc)

jfc = jfc.drop(smas + emas, axis=1)

jfc.head()

1. Dimensionality Reduction (PCA)¶

Before using the data to develop the model, the dimension (number of columns) of the data set was reduced using Principal Component Analysis (PCA). Retaining 99% of the variance explained, the top two principal components were used in the development of the model.

def pca(X):

"""Return rotated design matrix, new coordinate system and variance

explained.

"""

# Mean-center data set.

X_mc = X - np.outer(np.ones(X.shape[0]), X.mean(0))

eigval, eigvec = np.linalg.eig((X_mc.T).dot(X_mc))

eigval_srtd = eigval[np.argsort(eigval)[::-1]] / eigval.sum()

eigvec_srtd = eigvec[:, np.argsort(eigval)[::-1]]

# Plot variance explained.

variance_explained = eigval_srtd

plt.plot(range(1, len(variance_explained)+1), variance_explained, 'o-')

plt.ylim(0,1)

plt.xlabel('PC')

plt.ylabel('variance explained')

plt.show();

return X_mc.dot(eigvec_srtd), eigvec_srtd, variance_explained

def project_pca(stock, X_new, n_components=2):

"""Return DataFrame with the selected first few components."""

X_new = np.real(X_new)

# Get first n components

X_new1 = pd.DataFrame(X_new).iloc[:,:n_components]

X_new1.columns = ["PC" + str(x + 1) for x in range(n_components)]

# Replace with PCA

stock_new = stock.iloc[:,:6].merge(X_new1, left_index=True,

right_index=True)

return stock_new

stock = jfc.copy()

X_new, w, variance_explained = pca(stock.drop(['name', 'symbol', 'returns',

'volume', 'price', 'date'],

axis=1))

stock_new = project_pca(stock, X_new, n_components=2)

stock_new.head()

2. Model development¶

In this study, three models are considered in predicting the future stock price of JFC. A brief description is shown as follows.

- Gradient boosting regression and naive forecasting. Since the features are data that are not available prior to the closing price to be predicted, naive method of forecasting was applied on the features to determine their values in the next $n$ days, that is, the feature values of the last $n$ days were used to set the feature values of the next $n$ days. Using the predicted feature values, gradient boosting regression is used to predicted the stock price of JFC.

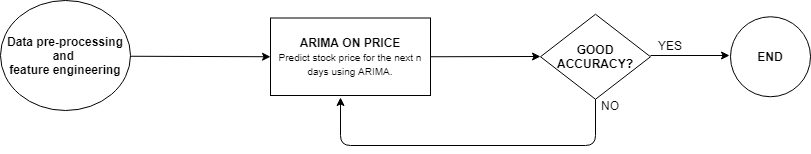

- ARIMA on stock prices. In this case, only the daily closing prices were used tp predict the stock price of JFC in the next $n$ days.

- ARIMA on the features and gradient boosting regression on the stock price. This case is similar to (1) except that we use ARIMA in predicting the feature values instead of the naive method of forecasting. Then, we use gradient boossting regression on the predicted feature values to predicted the stock price of JFC.

3. Train-test Interval selection¶

In selecting the time interval of the stock prices to be used in the training and testing of the model, two setups were considered to predict the closing price of JFC in the next 10 days.

Partitioned Intervals: In this setup, 15 intervals were considered with train size of 100. The starting days between intervals are spaced by 50 trading days.

RSI-based Intervals: In this setup, a train set/interval starts and ends at two different days when the RSI touches the support level (RSI=30) from a declining trend. These points of "RSI support kiss" need not be consecutive.

4. Model Accuracy Measurement¶

In determining which model and setup gave the best results, the following error metrics were used.

- Mean Squared Error (MSE)

- Root Mean Squared error (RMSE)

- Mean Absolute Percentage Error (MAPE)

- Last Point MAPE: MAPE of the nth (last) predicted price

Note that the goal here is to minimize these errors.

First, we plot the daily closing price of JFC from January 2012 to December 2019, alongside the corresponding daily RSI. Note that we have to show these plots since we will be needing this in selecting the RSI-based intervals for the second setup of the interval selection.

def regressor_model(stock, model_name, mod, num_preds, train_size):

"""Return performance of model using Naive method for predicting

features.

"""

stock = stock.drop(['name', 'symbol', 'date', 'returns', 'volume'],axis=1)

y_train = stock.price[ : train_size].to_numpy()

X_train = stock.drop('price', axis=1)[ : train_size].to_numpy()

y_test = stock.price[train_size : (train_size + num_preds)].to_numpy()

#Naive assumption

X_test = X_train[-num_preds:]

mod.fit(X_train, y_train)

y_pred = mod.predict(X_test)

y_pred_train = mod.predict(X_train)

plt.figure(figsize=(10,5))

plt.plot(range(len(y_pred)), y_pred, 'o-', color='red', label='Prediction')

plt.plot(range(len(y_test)), y_test, 'o-', color='blue', label='Actual')

plt.legend()

plt.show()

mse = mean_squared_error(y_test, y_pred)

rmse = sqrt(mean_squared_error(y_test, y_pred))

mape = mean_absolute_percentage_error(y_test, y_pred)

lastpoint_mape = mean_absolute_percentage_error(y_test[-1], y_pred[-1])

train_mse = mean_squared_error(y_train, y_pred_train)

train_rmse = sqrt(train_mse)

train_mape = mean_absolute_percentage_error(y_train, y_pred_train)

return pd.DataFrame({'Regression Method' : [model_name],

'Train MSE' : [train_mse],

'Train RMSE' : [train_rmse],

'Train MAPE' : [train_mape],

'Test MSE' : [mse],

'Test RMSE' : [rmse],

'Test MAPE' : [mape],

'Last point MAPE' : [lastpoint_mape]

})

# ARIMA

def difference(dataset, interval=1):

"""Return differenced series."""

diff = list()

for i in range(interval, len(dataset)):

value = dataset[i] - dataset[i - interval]

diff.append(value)

return np.array(diff)

def inverse_difference(history, yhat, interval=1):

"""Return invert-differenced value."""

return yhat + history[-interval]

def evaluate_arima_model(X, arima_order, num_preds, train_size):

"""Return RMSE of ARIMA model given order (p, d, q)."""

X = X.astype('float32')

train= X[ : train_size]

test = X[train_size : (train_size + num_preds)]

history = [x for x in train]

predictions = list()

for t in range(len(test)):

# Difference data

interval_diff = 1

diff = difference(history, interval_diff)

model = ARIMA(diff, order=arima_order)

model_fit = model.fit(trend='nc', disp=0)

yhat = model_fit.forecast()[0]

yhat = inverse_difference(history, yhat, interval_diff)

predictions.append(yhat)

history.append(test[t])

# Calculate error metrics

mse = mean_squared_error(test, predictions)

rmse = sqrt(mse)

return rmse

def evaluate_models(dataset, p_val, d_val, q_val, num_preds, train_size):

"""Return order (p,d,q) with the best ARIMA results."""

dataset = dataset.astype('float32')

best_score, best_cfg = float("inf"), None

for p in tqdm(p_val):

for d in d_val:

for q in q_val:

order = (p,d,q)

try:

mse = evaluate_arima_model(dataset, order, num_preds,

train_size)

if mse < best_score:

best_score, best_cfg = mse, order

except:

continue

# print('Best ARIMA%s RMSE=%.3f' % (best_cfg, best_score))

return best_cfg

def evaluate_best(dataset, p_val, d_val, q_val, num_preds, train_size):

"""Return error metric results of the best order (p,d,q) for ARIMA."""

arima_order = evaluate_models(dataset,

p_val, d_val, q_val,

num_preds, train_size)

X = dataset

X = X.astype('float32')

train= X[ : train_size]

test = X[train_size : (train_size + num_preds)]

history = [x for x in train]

predictions = list()

for t in range(len(test)):

# Difference data

interval_diff = 1

diff = difference(history, interval_diff)

model = ARIMA(diff, order=arima_order)

model_fit = model.fit(trend='nc', disp=0)

yhat = model_fit.forecast()[0]

yhat = inverse_difference(history, yhat, interval_diff)

predictions.append(yhat)

history.append(test[t])

# Calculate error

mse = mean_squared_error(test, predictions)

rmse = sqrt(mse)

mape = mean_absolute_percentage_error(test, predictions)

lastpoint_mape = mean_absolute_percentage_error(test[-1], predictions[-1])

plt.figure(figsize=(10,5))

plt.plot(range(len(predictions)), predictions, 'o-', color='red',

label='Prediction')

plt.plot(range(len(test)), test, 'o-', color='blue', label='Actual')

plt.legend()

plt.show()

return pd.DataFrame({'Regression Method' : ['ARIMA'],

'Test MSE' : [mse],

'Test RMSE' : [rmse],

'Test MAPE' : [mape],

'Last point MAPE' : [lastpoint_mape]

})

def get_best_prediction(dataset, p_val, d_val, q_val, num_preds, train_size):

"""Return predicted prices using ARIMA with best results."""

arima_order = evaluate_models(dataset, p_val, d_val, q_val,

num_preds, train_size)

X = dataset

X = X.astype('float32')

train= X[ : train_size]

test = X[train_size : (train_size + num_preds)]

history = [x for x in train]

predictions = list()

for t in range(len(test)):

# Difference data

diff = difference(history, 1)

model = ARIMA(diff, order=arima_order)

model_fit = model.fit(trend='nc', disp=0)

yhat = model_fit.forecast()[0]

yhat = inverse_difference(history, yhat, 1)

predictions.append(yhat)

history.append(test[t])

# Calculate sample error

mse = mean_squared_error(test, predictions)

rmse = sqrt(mse)

mape = mean_absolute_percentage_error(test, predictions)

return predictions

def regressor_model_plus(stock, model_name, mod, num_preds, train_size,

p_values, d_values, q_values):

"""Return error metric results of ARIMA + regressor combination."""

stock = stock.drop(['name', 'symbol', 'date'], axis=1)

features = stock.drop('price', axis=1).columns

feature_cols = stock.drop('price', axis=1)

y_train = stock.price[ : train_size]

X_train = stock.drop('price', axis=1)[ : train_size]

y_test = stock.price[train_size : (train_size + num_preds)].to_numpy()

# Perform ARIMA on each feature

X_test = pd.DataFrame()

for col in tqdm(features):

X_test[col] = get_best_prediction(feature_cols[col].values,

p_values, d_values, q_values,

num_preds, train_size)

mod.fit(X_train, y_train)

y_pred = mod.predict(X_test)

plt.figure(figsize=(10,5))

plt.plot(range(len(y_pred)), y_pred, 'o-', color='red', label='Prediction')

plt.plot(range(len(y_test)), y_test, 'o-', color='blue', label='Actual')

plt.legend()

plt.show()

mse = mean_squared_error(y_test, y_pred)

rmse = sqrt(mean_squared_error(y_test, y_pred))

mape = mean_absolute_percentage_error(y_test, y_pred)

lastpoint_mape = mean_absolute_percentage_error(y_test[-1], y_pred[-1])

return pd.DataFrame({'Regression Method' : [model_name],

'Test MSE' : [mse],

'Test RMSE' : [rmse],

'Test MAPE' : [mape],

'Last point MAPE' : [lastpoint_mape]

})

def plot_stock(X):

"""Display price-macd-rsi graph of a stock."""

fig, ax = plt.subplots(2, 1, figsize=(18, 12), sharex=True,

constrained_layout=True,

gridspec_kw={'height_ratios': [3, 1]})

# PRICE

ax[0].plot(range(len(X.price)), X.price)

ax[0].set_title("JFC Stock Price (Jan 2012 to Dec 2019)", fontsize=18)

ax[0].set_ylabel('Price', fontsize=15)

ax[0].grid(True)

# # MACD Signal

# ax[1].plot(range(len(X.macd_signal)), X.macd_signal)

# ax[1].set_title("MACD Signal", fontsize=18)

# ax[1].grid(True)

# ax[1].hlines(y=[0], xmin=-10, xmax=len(X), linestyles='dashed')

# RSI

ax[1].plot(range(len(X.rsi)), X.rsi)

ax[1].set_title("RSI", fontsize=18)

ax[1].set_xlabel('Day', fontsize=15)

ax[1].set_xticks(range(0, len(X), 10))

for tick in ax[1].get_xticklabels():

tick.set_rotation(90)

ax[1].grid(True)

ax[1].hlines(y=[30, 80], xmin=-10, xmax=len(X), linestyles='dashed')

ax

return None

def run_partitioned_intervals(X):

"""Return accuracy (error metrics) of model based on equally partitioned

data set.

"""

mod1 = GradientBoostingRegressor(n_estimators=100,max_depth=10)

num_preds = 10

train_size = 100

p_values = range(0, 2)

d_values = range(0, 2)

q_values = range(0, 2)

iters = 0

for start in tqdm(range(0, 750, 50)):

iters += 1

perf1 = regressor_model(X[start:], 'GBR', mod1, num_preds, train_size)

perf2 = evaluate_best(X.price[start:].to_numpy(), p_values, d_values,

q_values, num_preds, train_size)

perf3 = regressor_model_plus(X[start:], 'ARIMA + GBR', mod1,

num_preds, train_size, p_values,

d_values, q_values)

perf = pd.DataFrame(columns=['Model', 'No. of Intervals', 'Ave. MSE',

'Ave. RMSE', 'Ave. MAPE',

'Ave. Last Point MAPE'])

perf.loc[0] = ['GBR', iters] + perf1.mean()[-4:].tolist()

perf.loc[1] = ['ARIMA', iters] + perf2.mean()[-4:].tolist()

perf.loc[2] = ['ARIMA + GBR', iters] + perf3.mean()[-4:].tolist()

return perf

def run_rsi_based_intervals(X):

"""Return accuracy (error metrics) of model with train sets based on RSI

values.

"""

mod1 = GradientBoostingRegressor(n_estimators=100,max_depth=10)

rsi_bottoms = [60, 85, 145, 450, 517, 645]

num_preds = 10

p_values = range(0, 2)

d_values = range(0, 2)

q_values = range(0, 2)

iters = 0

for i in tqdm(range(1, len(rsi_bottoms))):

for j in range(i):

iters += 1

train_size = rsi_bottoms[i] - rsi_bottoms[j]

start = rsi_bottoms[j]

perf1 = regressor_model(X[start:], 'GBR', mod1, num_preds,

train_size)

perf2 = evaluate_best(X.price[start:].to_numpy(), p_values,

d_values, q_values, num_preds, train_size)

perf3 = regressor_model_plus(X[start:], 'ARIMA + GBR', mod1,

num_preds, train_size, p_values,

d_values, q_values)

perf = pd.DataFrame(columns=['Model', 'No. of Intervals', 'Ave. MSE',

'Ave. RMSE', 'Ave. MAPE',

'Ave. Last Point MAPE'])

perf.loc[0] = ['GBR', iters] + perf1.mean()[-4:].tolist()

perf.loc[1] = ['ARIMA', iters] + perf2.mean()[-4:].tolist()

perf.loc[2] = ['ARIMA + GBR', iters] + perf3.mean()[-4:].tolist()

return perf

plot_stock(jfc)

Observe that the "RSI support kiss" points happened on days 60, 85, 145, 450, 517 and 645. With these points, we can form 15 intervals (days 60 to 85, days 60 to 145, etc). Although there are other such points beyond day 645, we only considered these points to minimize the run-time of the Python program.

# Load data to X

X = stock_new.copy()

1. Partitioned Intervals¶

Looking at the results of the partitioned intervals setup, it turns out that ARIMA gave the best results across all error metrics. On the other hand, one of the possible reasons that ARIMA + GBR gave a bad result is the possibility of the error being multiplied when the two models were implemented sequentially. Another possible reason is that the parameters used in implementing GBR might not be applicable to some of the intervals, and hence increasing the mean error.

partition_perf = run_partitioned_intervals(X)

partition_perf

2. RSI-based Intervals¶

In this setup of selecting intervals based on "RSI support kiss" points, the model accuracy improved significantly across all error metrics. Particularly, there is a great improvement on the models that performed badly in the partitioned intervals setup.

rsi_perf = run_rsi_based_intervals(X)

rsi_perf

Summary¶

Based on the models used in this study - (1) gradient boosting regression and naive forecasting, (2) ARIMA, and (3) gradient boosting regression and ARIMA - ARIMA gave the best results in terms of predicting stock prices.

By introducing a way of selecting an interval to consider in training a model based on RSI, the accuracy of the models in predicting the price of a stock was improved significantly.

Recommendation:¶

- To further validate the results in this study, the model can be used in other stocks.

- Considering the idea that it takes time for a stock to reach an RSI of 30, other RSI levels can be tested in the method done in this study.

- Observing how the selection of interval significantly improved the performance of the model, other technical indicators can be explored and incorporated in the selection of the interval where the model will be trained.

[1] Patel, J., Shah, S., Thakkar and P., Kotecha, K. (2015). Predicting stock market index using fusion of machine learning techniques. Expert Systems with Applications, 42, 2162–2172

[2] Cam, J. (2015). The Trading Code. Laguna: Lex Media Digital Corp.

[3] Hyndman, R.J. and Athanasopoulos, G. (2018). Forecasting: principles and practice, 2nd edition, OTexts: Melbourne, Australia. OTexts.com/fpp2. Accessed on August 25, 2020.

[4] Gonzales, I. (2020). Market on short-lived high, but investors optimistic for 2020. Philstar Global. Retrieved from: https://www.philstar.com/business/2020/01/01/1981088/market-short-lived-high-investors-optimistic-2020

! jupyter nbconvert --to html Corro_MLIndivProject.ipynb